PCI-SIG Unveils PCIe 8.0 At A Screaming-Fast 1TB/s For Hungry AI Workloads

**PCIe 8.0 Speeds to 1TB/s, Revolutionizing AI and Tech**

What’s Happening?

PCI-SIG, the consortium behind PCI Express technology, has announced PCIe 8.0, a next-generation standard targeting up to 1 terabyte per second (TB/s) of bidirectional bandwidth over an x16 link. The jump in bandwidth is aimed at the growing demands of AI and machine learning workloads, and it sets a new benchmark for high-performance computing.

Where Is It Happening?

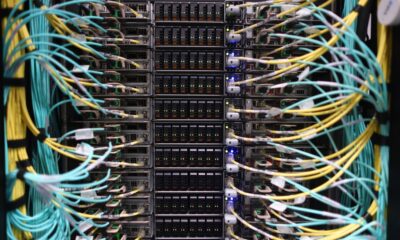

The unveiling of PCIe 8.0 is a global development, impacting tech industries worldwide. Companies specializing in AI, data centers, and high-performance computing will be particularly interested.

When Did It Take Place?

The announcement was made recently, marking a significant milestone in the evolution of PCI Express technology. The exact date may not have been publicly disclosed yet, but the implications are immediate and far-reaching.

How Is It Unfolding?

– PCIe 8.0 doubles the bandwidth of its predecessor, PCIe 7.0, reaching up to 1TB/s bidirectionally over an x16 link.

– It uses PAM4 (4-level Pulse-Amplitude Modulation) signaling, which PCI Express first adopted in PCIe 6.0.

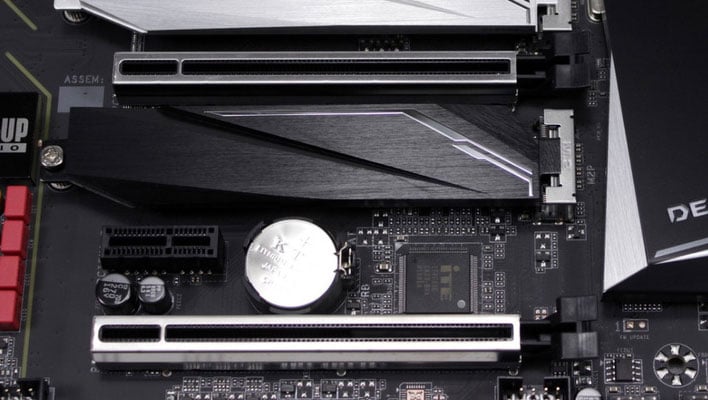

– Backward compatibility is maintained, ensuring smooth transitions for existing hardware.

– The new standard is designed to address the exponential growth in AI and machine learning data demands.

– Expect to see PCIe 8.0 in data centers and high-performance computing setups first.
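
To see where the 1TB/s headline figure comes from, here is a rough back-of-the-envelope sketch. It assumes the raw per-lane rates PCI-SIG has published for each generation (256 GT/s for PCIe 8.0) and treats each transfer as one bit per lane. The table and function names are illustrative only, and the result ignores encoding and error-correction overhead, so real-world throughput will be lower.

```python
# Raw per-lane transfer rates in GT/s (assumed from PCI-SIG's public figures).
RAW_GT_PER_S = {"5.0": 32, "6.0": 64, "7.0": 128, "8.0": 256}


def raw_bandwidth_gb_per_s(gen: str, lanes: int = 16, bidirectional: bool = True) -> float:
    """Return the raw link bandwidth in GB/s, ignoring protocol overhead."""
    gt_per_s = RAW_GT_PER_S[gen]
    # One bit per transfer per lane; divide by 8 to convert bits to bytes.
    per_direction = gt_per_s * lanes / 8
    return per_direction * 2 if bidirectional else per_direction


if __name__ == "__main__":
    for gen in RAW_GT_PER_S:
        one_way = raw_bandwidth_gb_per_s(gen, bidirectional=False)
        both_ways = raw_bandwidth_gb_per_s(gen)
        print(f"PCIe {gen} x16: {one_way:.0f} GB/s per direction, {both_ways:.0f} GB/s bidirectional")
```

For an x16 link at 256 GT/s this works out to 512 GB/s in each direction, or 1024 GB/s counting both directions, which is the roughly 1TB/s figure in the announcement. The same arithmetic gives 512 GB/s bidirectional for PCIe 7.0 and 256 GB/s for PCIe 6.0.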

Quick Breakdown

– **Speed:** 1TB/s, double that of PCIe 7.0.

– **Technology:** Uses PAM4 signaling for enhanced performance.

– **Compatibility:** Backward compatible with previous versions.

– **Applications:** Primarily targets AI, machine learning, and data-intensive tasks.

– **Impact:** Significant boost for data centers and high-performance computing.
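
For readers curious about what PAM4 means in practice, the idea is that each symbol on the wire carries two bits by using four amplitude levels, instead of the one bit per symbol of older two-level (NRZ) signaling. The sketch below is a toy illustration of that idea only: the Gray-coded level mapping and the function names are assumptions chosen for clarity, not the electrical encoding defined in the PCIe specification.

```python
# Toy Gray-coded mapping from bit pairs to four amplitude levels (0..3).
# Adjacent levels differ by a single bit, so a one-level error flips only one bit.
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
PAM4_TO_BITS = {level: pair for pair, level in GRAY_PAM4.items()}


def pam4_encode(bits: list[int]) -> list[int]:
    """Pack a bit sequence into PAM4 symbols, two bits per symbol."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols: list[int]) -> list[int]:
    """Unpack PAM4 symbols back into the original bit sequence."""
    bits: list[int] = []
    for level in symbols:
        bits.extend(PAM4_TO_BITS[level])
    return bits


if __name__ == "__main__":
    payload = [1, 0, 0, 1, 1, 1, 0, 0]
    symbols = pam4_encode(payload)
    print(f"{len(payload)} bits -> {len(symbols)} symbols: {symbols}")
    print("round trip ok:", pam4_decode(symbols) == payload)
```

Eight bits fit into four symbols here, which is why PAM4 can double the data rate without doubling the symbol rate. The trade-off is that four levels are harder to tell apart than two, so links using PAM4 rely on error correction to stay reliable.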

Key Takeaways

PCIe 8.0 represents a monumental leap in PCI Express technology, offering unprecedented bandwidth and performance. This is a game-changer for AI and machine learning, enabling faster data processing and more efficient workflows. While current PCIe 5.0 and 6.0 hardware will still be relevant for some time, this new standard sets the stage for the next generation of high-performance computing. For tech enthusiasts, it’s a glimpse into the future of cutting-edge technology.

“PCIe 8.0 is not just an upgrade; it’s a revolution. AI and machine learning applications will finally have the bandwidth they’ve been craving.”

– Dr. advanced computing, Tech Innovations Group

Final Thought

**PCIe 8.0’s 1TB/s speed is a watershed moment for tech, especially AI. While current hardware remains functional, this new standard heralds a future where data processing reaches unprecedented heights. For businesses and enthusiasts alike, it’s a call to prepare for the next wave of computational power.**